Has AI Gone Too Far?

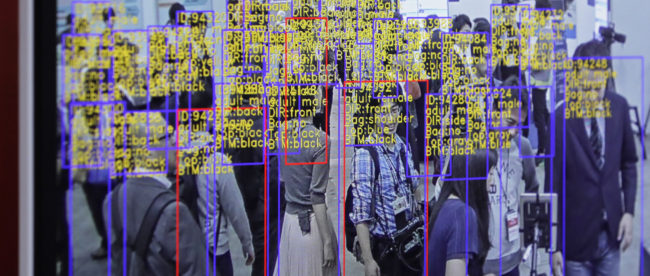

The object detection and tracking technology developed by SenseTime Group Ltd. is displayed on a screen at the Artificial Intelligence Exhibition & Conference in Tokyo, Japan, on Wednesday, April 4, 2018. Photographer: Kiyoshi Ota/Bloomberg

By Dmitry Pleshkov

Artificial intelligence has come a long way in the last two decades. Self-aware machines like the androids from Blade Runner are still, fortunately, fictional — but failure to replicate human intelligence does not make the technologies we’ve created in real life any less impressive. Modern AI can perform tasks such as quickly recognizing faces in images, driving cars, and even things no human can accomplish, like convincingly faking anyone’s voice. As is the case with most powerful technologies, unfortunately, AI has great potential to be used for evil as well as good.

The Good

The main focus of this article is to describe the more sinister applications for AI, but for the purpose of contrast, it’s worth listing a few ways AI has been applied in modern society that are objectively good.

Healthcare

AI can be used to quickly analyze medical images, accurately detecting diseases while also taking into consideration past records. Such a diagnosis would be less prone to bias than, say, a doctor who discounts a patient’s severe pain as an overreaction. AI can also speed up the creation of new drugs by predicting the outcome of microscopic processes like protein folding and molecular binding.

Navigation

Given data such as street layouts, speed limits, and past traffic, an AI model can plot the fastest route from point A to point B and give an accurate ETA. Google Maps is a familiar example.
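To make the idea of a "fastest route" concrete, here is a minimal sketch. It is not how Google Maps works internally, and it uses no machine learning: it is the classical Dijkstra shortest-path algorithm, with each road's travel time assumed to be its length divided by its speed limit. The road network, the names `fastest_route` and `ROADS`, and all the numbers are hypothetical. A real system would also factor in the past traffic data mentioned above.

```python
import heapq


def fastest_route(roads, start, goal):
    """Return (eta_minutes, path) for the quickest drive from start to goal.

    roads maps each intersection to a list of
    (neighbor, length_km, speed_limit_kmh) tuples.
    Returns (None, []) if the goal cannot be reached.
    """
    best = {start: 0.0}          # quickest known time to each intersection
    previous = {}                # where we came from on that quickest route
    queue = [(0.0, start)]       # min-heap ordered by elapsed minutes

    while queue:
        minutes, node = heapq.heappop(queue)
        if node == goal:
            break
        if minutes > best.get(node, float("inf")):
            continue             # stale queue entry, a quicker way was found
        for neighbor, length_km, speed_kmh in roads.get(node, []):
            candidate = minutes + 60.0 * length_km / speed_kmh
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))

    if goal not in best:
        return None, []

    # Walk backwards from the goal to rebuild the route.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return best[goal], path


# Hypothetical map: a 12 km highway from A to B, or a shortcut through C.
ROADS = {
    "A": [("B", 12.0, 60.0), ("C", 3.0, 30.0)],
    "C": [("B", 2.0, 30.0)],
    "B": [],
}

eta, path = fastest_route(ROADS, "A", "B")
print(path, eta)
```

In this made-up map the slower side streets win because they are so much shorter, which is exactly the kind of trade-off a navigation app weighs for you.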

Transportation

Self-driving cars are probably the first example that comes to mind when you hear “AI” and “transportation” in the same sentence, but AI has its uses in contemporary human-driven cars as well. For instance, an AI model can be trained to accurately detect when a collision is about to occur and apply emergency brakes. A model can also be trained to learn a driver’s habits, namely how aggressively they press on the gas pedal, and give accurate fuel estimates based on that data.

The Bad

Usage Analytics

Usage analytics is the most prominent example of AI being used in a subjectively bad way. Ever wonder why Google, Facebook, and a plethora of other websites are free to use? Being paid to show advertisements to you plays a big part in financially supporting these services. The majority of the revenue, however, comes from collecting, analyzing, and selling user data to advertisers, who will then serve ads specifically targeted to you, increasing the likelihood of you buying a product. Feeding the AI with more data only makes its predictions more accurate.

Now, this practice isn’t inherently bad. After all, I’m sure most people would rather receive targeted ads than pay to use a service like Google. However, there’s a point after which the data collection becomes too intrusive. Most people would object to a service eavesdropping on their conversations through their microphone, but would be fine with being anonymously sorted into interest groups based on what they search for on Google, for example. Anonymity is usually what it comes down to: I don’t mind some service targeting ads at me so long as the ads are targeted at some interest group I’m part of and not at my identity specifically. A good analogy is junk mail: it’s far creepier to receive a junk letter addressed specifically to you and hand-signed by the sender than it is to receive a printed letter that was likely sent to millions of others.

With a lack of anonymity comes a rather hairy problem that boils down to the fact that everyone is unique in terms of behavior. Tying people’s usage data to their identity allows for a rather dystopian tracking system to be built. Imagine this: you’re on the run from an authoritarian government. You’ve gotten surgery to change your facial appearance and voice, and an entirely new identity to prevent the regime from tracking you down. You get to a computer and start typing. Suddenly alarms flash and police sirens sound. You didn’t log into any account, and your body is unrecognizable as your old self, but one thing remained: your behavior. Knowing your past actions and habits, the system was able to identify you based simply on how you type: factors such as the keys you struggle to type, the timing between keystrokes, and your words per minute. Of course, realistically a recording of keystrokes alone would not be enough to track a person down, but only so much data needs to be collected before the pattern becomes unique to you.
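As a rough illustration of how typing rhythm could be compared, here is a toy sketch. It assumes every person typed the same short phrase and that we recorded the time of each key press in milliseconds. The function names, the profiles, and the timings are all invented for this example. It is a simple nearest-match comparison, not a trained AI model, and nowhere near reliable enough to identify a real person.

```python
from statistics import mean


def intervals(timestamps_ms):
    """Gaps between consecutive key presses, in milliseconds."""
    return [later - earlier
            for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def typing_distance(sample_a, sample_b):
    """Average difference in rhythm between two recordings of one phrase.

    Smaller values mean the two recordings have more similar timing.
    """
    gaps_a, gaps_b = intervals(sample_a), intervals(sample_b)
    if not gaps_a or len(gaps_a) != len(gaps_b):
        raise ValueError("need two recordings of the same phrase")
    return mean(abs(a - b) for a, b in zip(gaps_a, gaps_b))


def closest_profile(profiles, sample):
    """Name of the stored profile whose rhythm best matches the sample."""
    return min(profiles, key=lambda name: typing_distance(profiles[name], sample))


# Hypothetical stored recordings (key-press times for a five-letter word).
PROFILES = {
    "alice": [0, 120, 260, 350, 520],
    "bob": [0, 200, 380, 610, 800],
}

# A new, anonymous recording of the same word.
SAMPLE = [0, 115, 250, 345, 510]

print(closest_profile(PROFILES, SAMPLE))
```

With only four gaps per recording, a match like this is close to meaningless. The worrying part is that each additional signal (mouse movement, vocabulary, browsing hours) narrows the field further.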

Facial Recognition

Similar to the behavioral identification system described above, facial recognition is a simpler AI-powered technology governments can use to track people down. Facial recognition is, simply put, the ability of a computer program to identify human faces given an image. Unlike the system above, facial recognition technology already exists and is unfortunately already being used by totalitarian regimes — namely, the Chinese Communist Party (CCP).

Hong Kong, a former British colony, is a special administrative region of China that exercises relative autonomy from the rest of the nation, with rights like freedom of speech and the press outlined in the constitution known as Hong Kong Basic Law. Recent actions by the CCP have infringed on those freedoms, however, resulting in an ongoing wave of protests.

Hong Kong police have paired facial recognition with CCTV cameras to track down protesters. Many protesters responded by wearing masks to avoid being tracked, which was followed by a prohibition of face coverings, something that twenty years ago would have been a prime plot for a cyberpunk dystopian novel. Whether or not the use of facial recognition to track down potential criminals is ethical remains a matter for debate.

Deepfakes

Lying is natural human behavior. As such, we as humans tend to take most information with a grain of salt. For instance, if someone told me that the moon was made of cheese, I wouldn’t believe them unless they provided concrete evidence to back up their claim.

The problem with evidence, though, is that it can be faked. Eyewitnesses can be told to lie, documents can be forged, and even photos can be doctored. But until recently, one form of evidence has been extremely trustworthy — video footage.

Suppose for example that I am trying to fake a video and audio recording of the president saying he hates ice cream. Before the age of computers, such a fake would require me to hire trained impersonators to either create an entirely new fake video or edit existing footage. The latter option would be made even more difficult by the fact that one would need to edit each frame individually by hand. Going through this process in most cases wouldn’t be worth it as it would take several days to fabricate such evidence.

AI makes this process much easier. Free open-source software exists that not only allows one to do things like synthesizing someone’s voice and facial expression, but can also be run on any modern computer — allowing anyone with a half-decent computer to create such “deepfakes”.

So, how would I fake the president saying he hates ice cream? First, I would find some footage of him speaking, such as a press conference. Then, I would use a well-trained voice synthesizer model to create a convincing audio track of him saying he hates ice cream. Finally, I would use a facial expression synthesizer to make it look as if the president truly is saying he hates ice cream. If this sounds simple, it’s because it is: while a significant amount of programming knowledge is needed to build these AI models, using them does not require much.

With deepfakes arises the question: what can you trust? Now, nearly every single medium of information can be tampered with: documents can be forged, images can be edited, and video and audio can be deepfaked. Fortunately, at the moment, most deepfakes can be recognized if you look closely, through tells such as facial expressions that are too still or voices that are too monotone. As AI technology progresses and models can be trained far quicker, the gap between deepfakes and reality will likely close.

What Happens Next?

With powerful technologies like this, regulation tends to take a couple of years to catch up. We will likely see new laws passed in the near future regulating deepfakes and data collection. Some already have been, such as California’s Assembly Bill 1215, a three-year moratorium on police using facial recognition in body cameras, and the California Consumer Privacy Act, a statute giving consumers the rights to know about and delete the personal information businesses collect, to opt out of the sale of their personal information, and not to be discriminated against for exercising those rights. Deepfakes as a matter of national security have also been addressed in the National Defense Authorization Act for Fiscal Year 2020. It is only reasonable to assume more legislation on this matter will follow.
